git clone https://github.com/nvidia-cosmos/cosmos-predict2.git

cd cosmos-predict2

conda create -n cosmos_nvidia -y python==3.11.3

conda activate cosmos_nvidia

pip install pandas pydantic

pip install "timm>=1.0.17"

pip install aiofiles==23.2.1

pip install matplotlib

pip install torch==2.6.0 torchvision==0.21.0 torchaudio==2.6.0 --index-url https://download.pytorch.org/whl/cu124

pip install https://github.com/kingbri1/flash-attention/releases/download/v2.7.4.post1/flash_attn-2.7.4.post1+cu124torch2.6.0cxx11abiFALSE-cp311-cp311-win_amd64.whl

pip install xformers==0.0.29.post3 --index-url=https://download.pytorch.org/whl/cu124

pip install https://github.com/woct0rdho/triton-windows/releases/download/v3.2.0-windows.post9/triton-3.2.0-cp311-cp311-win_amd64.whl

pip install "huggingface_hub[hf_xet]"

pip install https://github.com/woct0rdho/SageAttention/releases/download/v2.1.1-windows/sageattention-2.1.1+cu126torch2.6.0-cp311-cp311-win_amd64.whl
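The prebuilt wheels above encode their target Python, CUDA, and torch versions in the filename, so a mismatch with the conda environment can be spotted before installing. The following is a minimal sketch, not part of the original notes; `parse_wheel_tags` and `interpreter_tag` are hypothetical helper names, and the parsing assumes the usual `name-version-python-abi-platform.whl` layout.

```python
import re
import sys


def parse_wheel_tags(filename: str) -> dict:
    """Split a wheel filename into name, version, and compatibility tags."""
    stem = filename.removesuffix(".whl")
    # The last three dash-separated fields are the python/abi/platform tags.
    head, python_tag, abi_tag, platform_tag = stem.rsplit("-", 3)
    name, version = head.split("-")[:2]  # an optional build tag is ignored
    # Text after "+" is a local version label such as "cu124torch2.6.0".
    local = version.partition("+")[2]
    cuda = re.search(r"cu(\d+)", local)
    torch = re.search(r"torch(\d+(?:\.\d+)*)", local)
    return {
        "name": name,
        "python_tag": python_tag,
        "platform_tag": platform_tag,
        "cuda": cuda.group(1) if cuda else None,
        "torch": torch.group(1) if torch else None,
    }


def interpreter_tag(version_info=sys.version_info) -> str:
    """Return the CPython tag (e.g. "cp311") for a version tuple."""
    return f"cp{version_info[0]}{version_info[1]}"


if __name__ == "__main__":
    wheel = "sageattention-2.1.1+cu126torch2.6.0-cp311-cp311-win_amd64.whl"
    tags = parse_wheel_tags(wheel)
    print(tags)
    print("matches this interpreter:", tags["python_tag"] == interpreter_tag())
```

Run against the filenames used here, this shows that the flash-attn wheel is tagged cu124 while the SageAttention wheel is tagged cu126, both for torch 2.6.0 and cp311.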

pip install "huggingface_hub[cli]"
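After this many separate pip installs, it can help to list what actually ended up in the environment. The sketch below uses only the standard library; `installed_versions` is a hypothetical helper, and the distribution names in `PACKAGES` are assumed to match the wheels installed above.

```python
from importlib import metadata

# Distribution names assumed from the install commands above.
PACKAGES = [
    "torch",
    "torchvision",
    "torchaudio",
    "flash_attn",
    "xformers",
    "triton",
    "sageattention",
    "huggingface_hub",
]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(PACKAGES).items():
        print(f"{name:16} {version or 'NOT INSTALLED'}")
```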

git clone https://github.com/NVIDIA/Megatron-LM.git

cd Megatron-LM

pip install --upgrade diffusers

pip install "peft>=0.17.0"

Now edit Megatron-LM's setup.py so that it reads as follows:

import subprocess
import sys

from setuptools import Extension, setup

if sys.platform == "win32":
    # Compiler options for Windows (MSVC)
    compiler_args = ["/O2", "/W3", "/std:c++17"]
else:
    # Compiler options for Linux/macOS (GCC/Clang), as in the original
    compiler_args = ["-O3", "-Wall", "-std=c++17"]

setup_args = dict(
    ext_modules=[
        Extension(
            "megatron.core.datasets.helpers_cpp",
            sources=["megatron/core/datasets/helpers.cpp"],
            language="c++",
            extra_compile_args=(
                subprocess.check_output(["python", "-m", "pybind11", "--includes"])
                .decode("utf-8")
                .strip()
                .split()
            )
            # Use the platform-specific flags selected above
            + compiler_args,
            optional=True,
        )
    ]
)

setup(**setup_args)

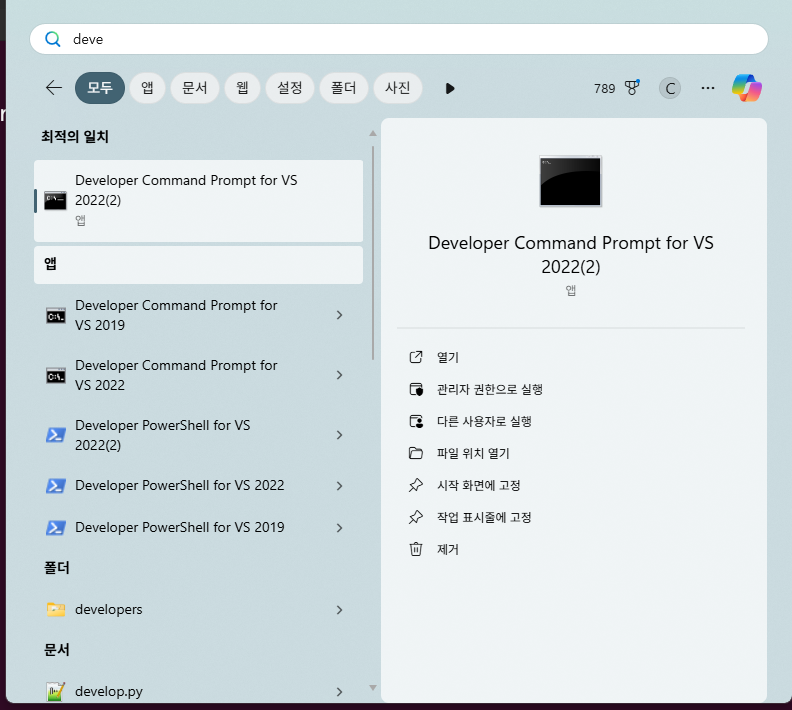

Run cl.exe.

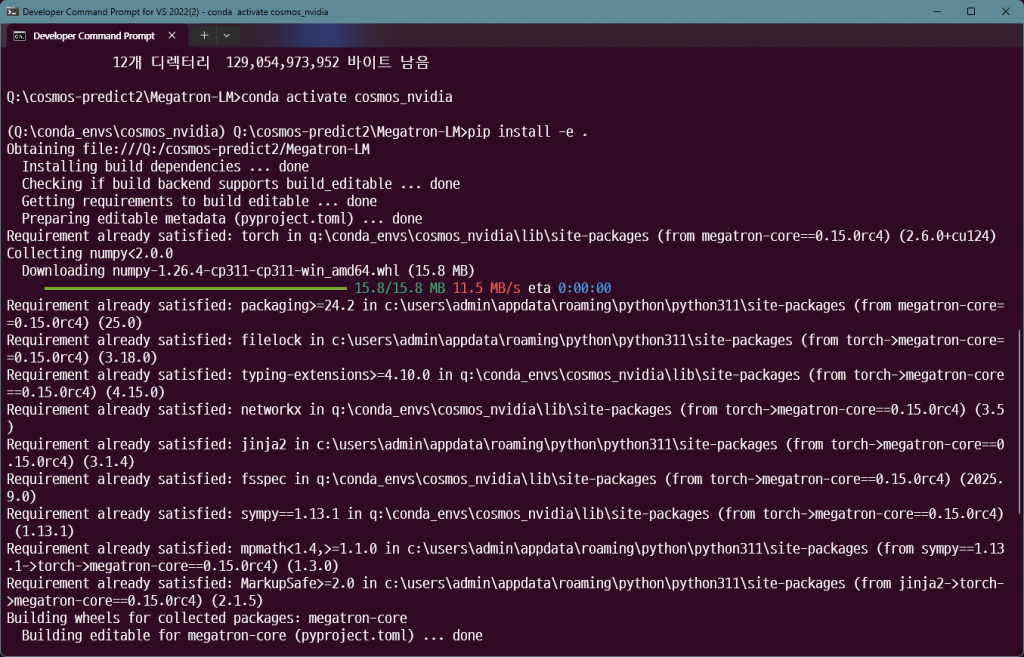
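Whether cl.exe is reachable from the current shell can also be checked from Python before starting the build. This is a minimal sketch; `find_compiler` is a hypothetical helper, and the candidate names other than `cl` are assumptions for non-Windows machines.

```python
import shutil


def find_compiler(candidates=("cl", "g++", "clang++")):
    """Return the full path of the first compiler found on PATH, or None."""
    for name in candidates:
        path = shutil.which(name)
        if path:
            return path
    return None


if __name__ == "__main__":
    compiler = find_compiler()
    print(compiler or "No C++ compiler found on PATH")
```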

Activate the conda environment as shown, then run

pip install -e .

Test the installation with:

python -c "import megatron.core; print('✅ Megatron-core imported successfully!')"
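The one-line import test does not say whether the compiled helper built from setup.py is present. The sketch below checks for modules without crashing when one is missing; `module_available` is a hypothetical helper, and the module names are taken from the setup.py listing.

```python
import importlib.util


def module_available(name: str) -> bool:
    """Return True if the module can be located, False otherwise."""
    try:
        return importlib.util.find_spec(name) is not None
    except ImportError:
        # Raised when a parent package of a dotted name is missing or broken.
        return False


if __name__ == "__main__":
    for name in ("megatron.core", "megatron.core.datasets.helpers_cpp"):
        status = "found" if module_available(name) else "MISSING"
        print(f"{name}: {status}")
```

Since the extension is declared with `optional=True`, a failed compile does not abort the install, so a missing `helpers_cpp` is worth checking for explicitly.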

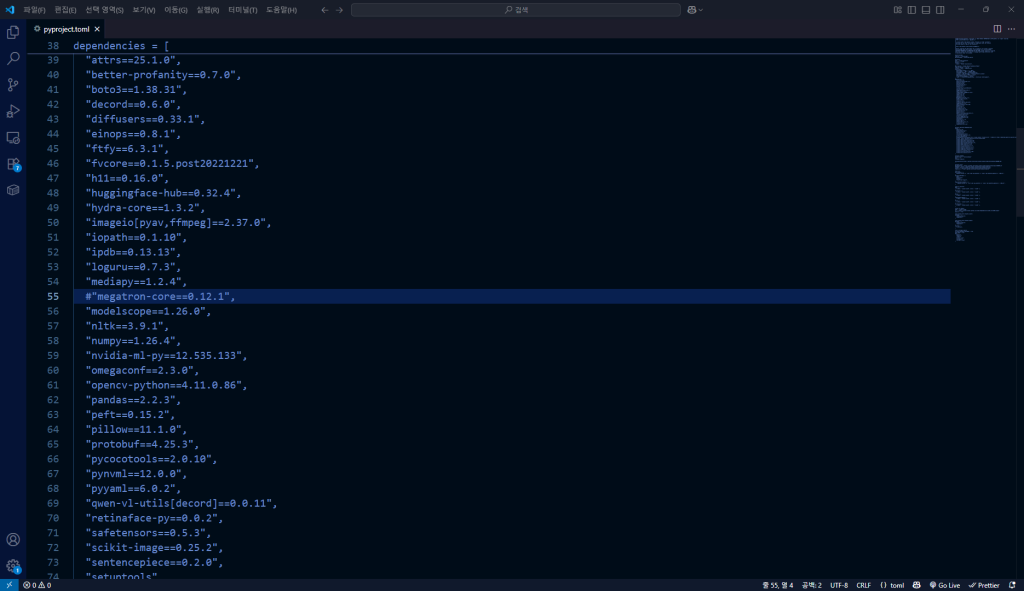

Comment out megatron-core in the dependencies of cosmos-predict2's pyproject.toml.

Then run

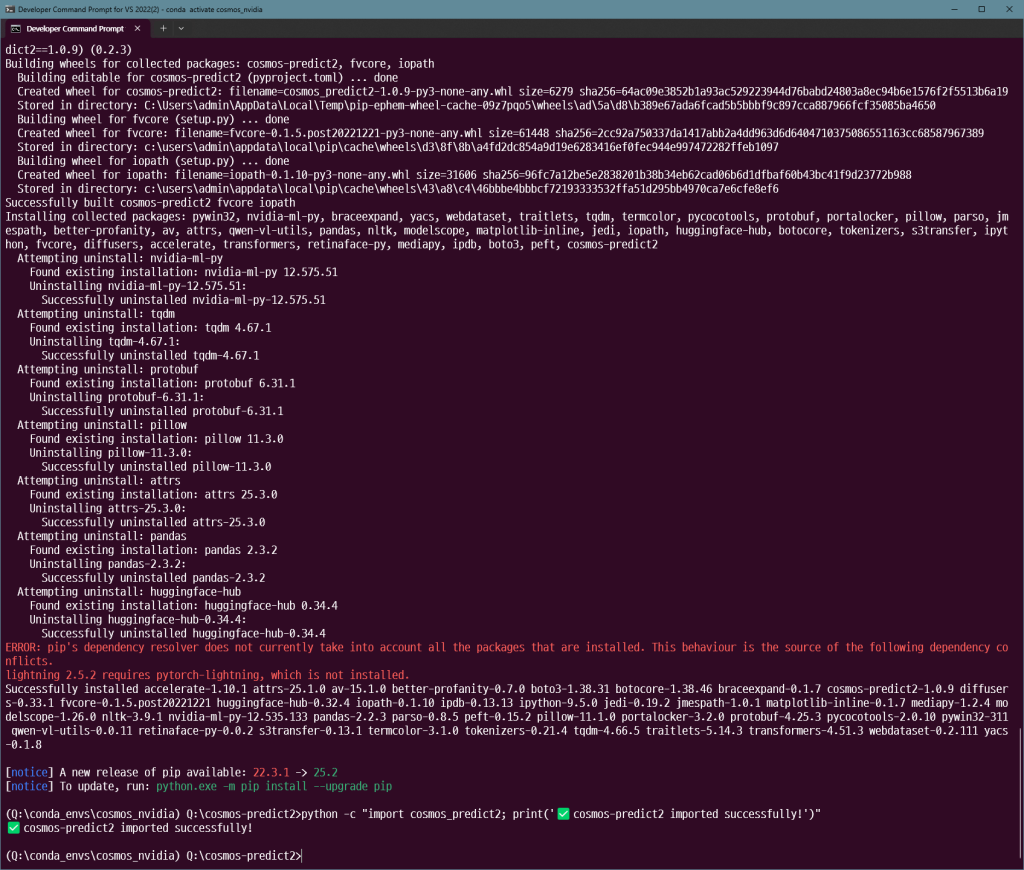

pip install -e .

It appears to have installed without problems.
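Commenting out the megatron-core entry can also be scripted, which helps if the repository is re-cloned later. This is a rough sketch under the assumption that each dependency sits on its own line as a quoted string; `comment_out_dependency` is a hypothetical helper, not something cosmos-predict2 provides.

```python
import re


def comment_out_dependency(toml_text: str, package: str) -> str:
    """Prefix the dependency line for `package` with '#', leaving others alone."""
    pattern = re.compile(
        rf'^([ \t]*)("{re.escape(package)}(?:\[[^\]]*\])?(?:[=<>!~][^"]*)?".*)$',
        re.MULTILINE,
    )
    # Lines that are already commented start with '#', so they do not match.
    return pattern.sub(r"\1#\2", toml_text)


if __name__ == "__main__":
    sample = 'dependencies = [\n    "mediapy==1.2.4",\n    "megatron-core==0.12.1",\n]\n'
    print(comment_out_dependency(sample, "megatron-core"))
```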

git lfs install

# download2B.py
from huggingface_hub import snapshot_download

# Download every file in the repository.
snapshot_download(
    repo_id="nvidia/Cosmos-Predict2-2B-Video2World",
    local_dir="./Cosmos-Predict2-2B-Video2World-models",  # local folder to download into
)
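Once the download finishes, a quick look at the file count and total size gives a rough sanity check that it did not stop early. The sketch below uses only the standard library; `summarize_download` is a hypothetical helper, and it counts every file under the folder, including any metadata the downloader keeps there.

```python
from pathlib import Path


def summarize_download(folder):
    """Return (number of files, total size in bytes) under `folder`."""
    files = [p for p in Path(folder).rglob("*") if p.is_file()]
    total_bytes = sum(p.stat().st_size for p in files)
    return len(files), total_bytes


if __name__ == "__main__":
    count, size = summarize_download("./Cosmos-Predict2-2B-Video2World-models")
    print(f"{count} files, {size / 1e9:.2f} GB")
```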

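# If pip does not work

# Clear the pip cache
pip cache purge

# Remove the existing pip installation
python -m pip uninstall pip

# Reinstall (pip itself was just removed, so bootstrap it with ensurepip first)
python -m ensurepip --upgrade

python -m pip install --upgrade https://files.pythonhosted.org/packages/b7/3f/945ef7ab14dc4f9d7f40288d2df998d1837ee0888ec3659c813487572faa/pip-25.2-py3-none-any.whl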
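To confirm that pip is usable again for the active interpreter, it can be invoked as a module from Python. This is a minimal sketch; `pip_works` is a hypothetical helper name.

```python
import subprocess
import sys


def pip_works() -> bool:
    """Return True if `python -m pip --version` succeeds for this interpreter."""
    result = subprocess.run(
        [sys.executable, "-m", "pip", "--version"],
        capture_output=True,
        text=True,
    )
    return result.returncode == 0


if __name__ == "__main__":
    print("pip is usable" if pip_works() else "pip is missing or broken")
```

Using `sys.executable` ties the check to the interpreter of the active conda environment rather than to whichever `pip` happens to be first on PATH.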

The modified pyproject.toml for cosmos-predict2:

# SPDX-FileCopyrightText: Copyright (c) 2025 NVIDIA CORPORATION & AFFILIATES. All rights reserved.

# SPDX-License-Identifier: Apache-2.0

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

[build-system]

requires = ["hatchling"]

build-backend = "hatchling.build"

[project]

name = "cosmos-predict2"

version = "1.0.9"

authors = [

{name = "NVIDIA Corporation"},

]

description = "Cosmos World Foundation Model"

requires-python = ">=3.10"

license = {text = "Apache-2.0"}

classifiers = [

"Development Status :: 4 - Beta",

"Environment :: GPU :: NVIDIA CUDA",

"Intended Audience :: Science/Research",

"License :: OSI Approved :: Apache Software License",

"Operating System :: POSIX :: Linux",

"Programming Language :: Python",

"Topic :: Scientific/Engineering :: Artificial Intelligence",

]

dependencies = [

"attrs==25.1.0",

"better-profanity==0.7.0",

"boto3==1.38.31",

"decord==0.6.0",

#"diffusers==0.33.1",

"diffusers==0.35.1",

"einops==0.8.1",

"ftfy==6.3.1",

"fvcore==0.1.5.post20221221",

"h11==0.16.0",

#"huggingface-hub==0.32.4",

"huggingface-hub==0.34.4",

"hydra-core==1.3.2",

"imageio[pyav,ffmpeg]==2.37.0",

"iopath==0.1.10",

"ipdb==0.13.13",

"loguru==0.7.3",

"mediapy==1.2.4",

#"megatron-core==0.12.1",

"modelscope==1.26.0",

"nltk==3.9.1",

"numpy==1.26.4",

"nvidia-ml-py==12.535.133",

"omegaconf==2.3.0",

"opencv-python==4.11.0.86",

"pandas==2.2.3",

#"peft==0.15.2",

"peft==0.17.1",

"pillow==11.1.0",

"protobuf==4.25.3",

"pycocotools==2.0.10",

"pynvml==12.0.0",

"pyyaml==6.0.2",

"qwen-vl-utils[decord]==0.0.11",

"retinaface-py==0.0.2",

"safetensors==0.5.3",

"scikit-image==0.25.2",

"sentencepiece==0.2.0",

"setuptools",

"termcolor==3.1.0",

"tqdm==4.66.5",

"transformers==4.51.3",

"triton==3.2.0",

"webdataset==0.2.111",

]

[project.optional-dependencies]

cu126 = [

"apex==0.1.0",

"flash-attn==2.6.3",

"natten==0.21.0",

"torch==2.6.0",

"torchvision==0.21.0",

"transformer-engine==1.13",

# Torch dependencies

# Dependencies determined from `uv pip install "torch==2.6.0" --index-url https://download.pytorch.org/whl/cu126`

# Issue: https://github.com/astral-sh/uv/issues/14237

"nvidia-cublas-cu12==12.6.4.1",

"nvidia-cuda-cupti-cu12==12.6.80",

"nvidia-cuda-nvrtc-cu12==12.6.77",

"nvidia-cuda-runtime-cu12==12.6.77",

"nvidia-cudnn-cu12==9.5.1.17",

"nvidia-cufft-cu12==11.3.0.4",

"nvidia-curand-cu12==10.3.7.77",

"nvidia-cusolver-cu12==11.7.1.2",

"nvidia-cusparse-cu12==12.5.4.2",

"nvidia-cusparselt-cu12==0.6.3",

"nvidia-nccl-cu12==2.21.5",

"nvidia-nvjitlink-cu12==12.6.85",

"nvidia-nvtx-cu12==12.6.77",

]

[project.readme]

content-type = "text/markdown"

text = '''

# Cosmos-Predict2

[Documentation](https://github.com/nvidia-cosmos/cosmos-predict2/blob/main/README.md)

'''

[project.urls]

documentation = "https://github.com/nvidia-cosmos/cosmos-predict2/blob/main/README.md"

homepage = "https://research.nvidia.com/labs/dir/cosmos-predict2"

issues = "https://github.com/nvidia-cosmos/cosmos-predict2/issues"

repository = "https://github.com/nvidia-cosmos/cosmos-predict2"

[tool.uv]

environments = [

"python_version == '3.10' and sys_platform == 'linux' and platform_machine == 'x86_64'",

]

no-build-package = [

"apex",

"flash-attn",

"natten",

"transformer-engine",

]

required-environments = [

"python_version == '3.10' and sys_platform == 'linux' and platform_machine == 'x86_64'",

]

[tool.uv.sources]

apex = [

{ index = "cosmos-cu126", extra = "cu126" },

]

flash-attn = [

{ index = "cosmos-cu126", extra = "cu126" },

]

natten = [

{ index = "cosmos-cu126", extra = "cu126" },

]

transformer-engine = [

{ index = "cosmos-cu126", extra = "cu126" },

]

torch = [

{ index = "cosmos-cu126", extra = "cu126" },

]

torchvision = [

{ index = "cosmos-cu126", extra = "cu126" },

]

[[tool.uv.index]]

name = "cosmos-cu126"

url = "https://nvidia-cosmos.github.io/cosmos-dependencies/cu126_torch260/simple"

explicit = true

[tool.hatch.build.targets.sdist]

packages = [

"cosmos_predict2",

"imaginaire",

]

[tool.hatch.build.targets.wheel]

packages = [

"cosmos_predict2",

"imaginaire",

]

exclude = [

"*_test.py",

]

[tool.coverage.report]

include_namespace_packages = true

skip_empty = true

omit = [

"tests/*",

"legacy/*",

".venv/*",

"**/test_*.py",

"config.py",

"config-3.10.py"

]

git clone --branch main https://github.com/NVIDIA/TransformerEngine.git

cd TransformerEngine

pip install . --no-build-isolation

pip install --no-cache-dir torch pybind11 wheel_stub ninja wheel packaging "setuptools>=77.0.0"

set PYTHONUTF8=1

pip install jax==0.7.1

pip install jaxlib==0.7.1

pip install . --no-build-isolation
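`set PYTHONUTF8=1` switches on Python's UTF-8 mode, presumably to avoid decoding errors during the build under a non-UTF-8 Windows code page (that motive is an assumption; the notes do not state it). Whether the setting took effect in the current shell can be checked with a short sketch like this one, where `utf8_mode_status` is a hypothetical helper:

```python
import locale
import os
import sys


def utf8_mode_status() -> dict:
    """Report whether Python's UTF-8 mode is active and the default encoding."""
    return {
        "utf8_mode": bool(sys.flags.utf8_mode),
        "PYTHONUTF8": os.environ.get("PYTHONUTF8"),
        "preferred_encoding": locale.getpreferredencoding(False),
    }


if __name__ == "__main__":
    for key, value in utf8_mode_status().items():
        print(f"{key}: {value}")
```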

For now I will stop being stubborn: this has not been ported to Windows yet, so I will try again later.

Refer to the following link:

https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

docker run --rm --gpus all nvidia/cuda:12.1.0-base-ubuntu22.04 nvidia-smi

# 1. Start from NVIDIA's official CUDA image.
FROM nvidia/cuda:12.1.0-base-ubuntu22.04

# 2. Update the apt package index and install the basic build tools and Python.
RUN apt-get update && apt-get install -y \
    build-essential \
    python3.11 \
    python3-pip \
    git \
    && rm -rf /var/lib/apt/lists/*

# 3. Set the working directory.
WORKDIR /workspace/TransformerEngine

# 4. Copy the source code into the container.
COPY . .

# 5. Build and install the source tree itself.
# Dependencies declared in pyproject.toml are installed automatically during this step.
RUN pip install .

Save the above as a Dockerfile, then run:

docker build -t te-dev .

docker run --gpus all --rm -v .:/workspace -v /workspace/.venv -it $(docker build -q .)
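Inside the running container, GPU visibility can be confirmed from Python in the same spirit as the `nvidia-smi` test above. This is a minimal sketch; `gpu_names` is a hypothetical helper, and it returns an empty list when `nvidia-smi` is not on PATH or fails.

```python
import shutil
import subprocess


def gpu_names() -> list:
    """Return the GPU names reported by nvidia-smi, or [] if none are visible."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    names = gpu_names()
    print(names if names else "No GPU visible; check the --gpus all flag")
```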